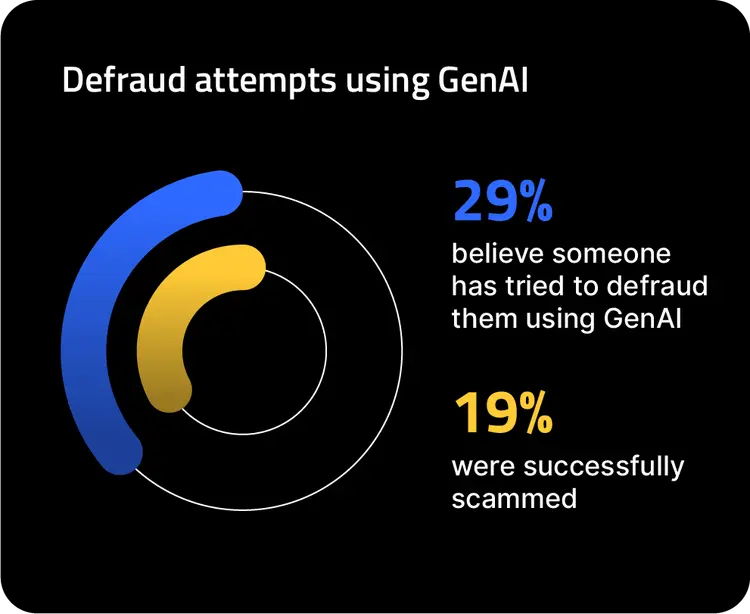

Artificial intelligence (AI) has rapidly emerged as one of the most transformative technologies of our time, reshaping the internet and the global economy. As AI’s capabilities expand, the technology has caught the eye of fraudsters looking to exploit its potential for their own illicit gain.

Cybercriminals are leveraging generative AI tools like ChatGPT to conduct increasingly sophisticated and scalable fraud attacks against both individuals and businesses. The technology’s ability to generate polished text, code, realistic audio, images, videos, and even entire websites makes it a powerful tool in the hands of malicious actors. For companies in today’s digital market, the only way to keep up is to follow suit and fight AI-fueled fraud with advanced, AI-powered technology.