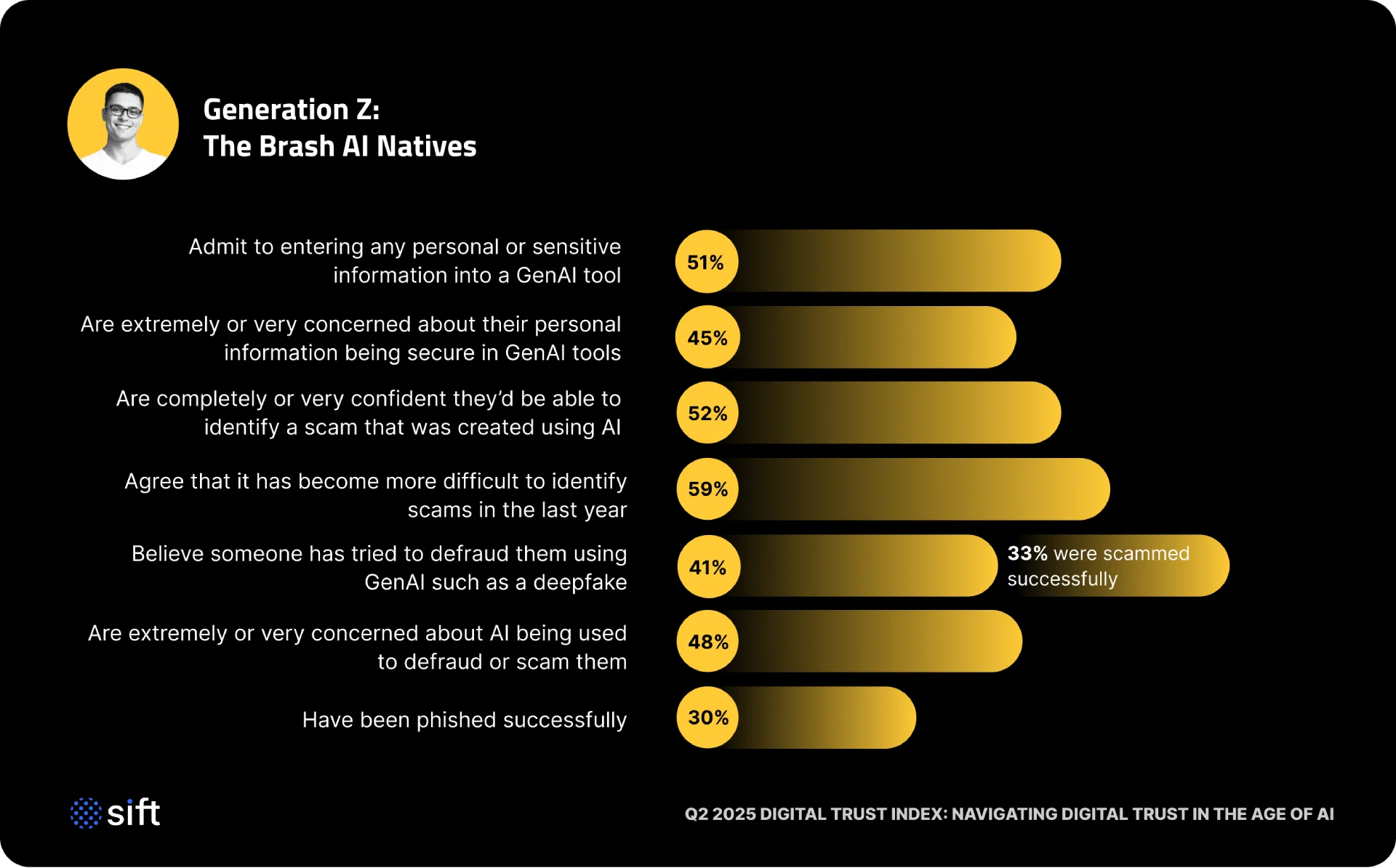

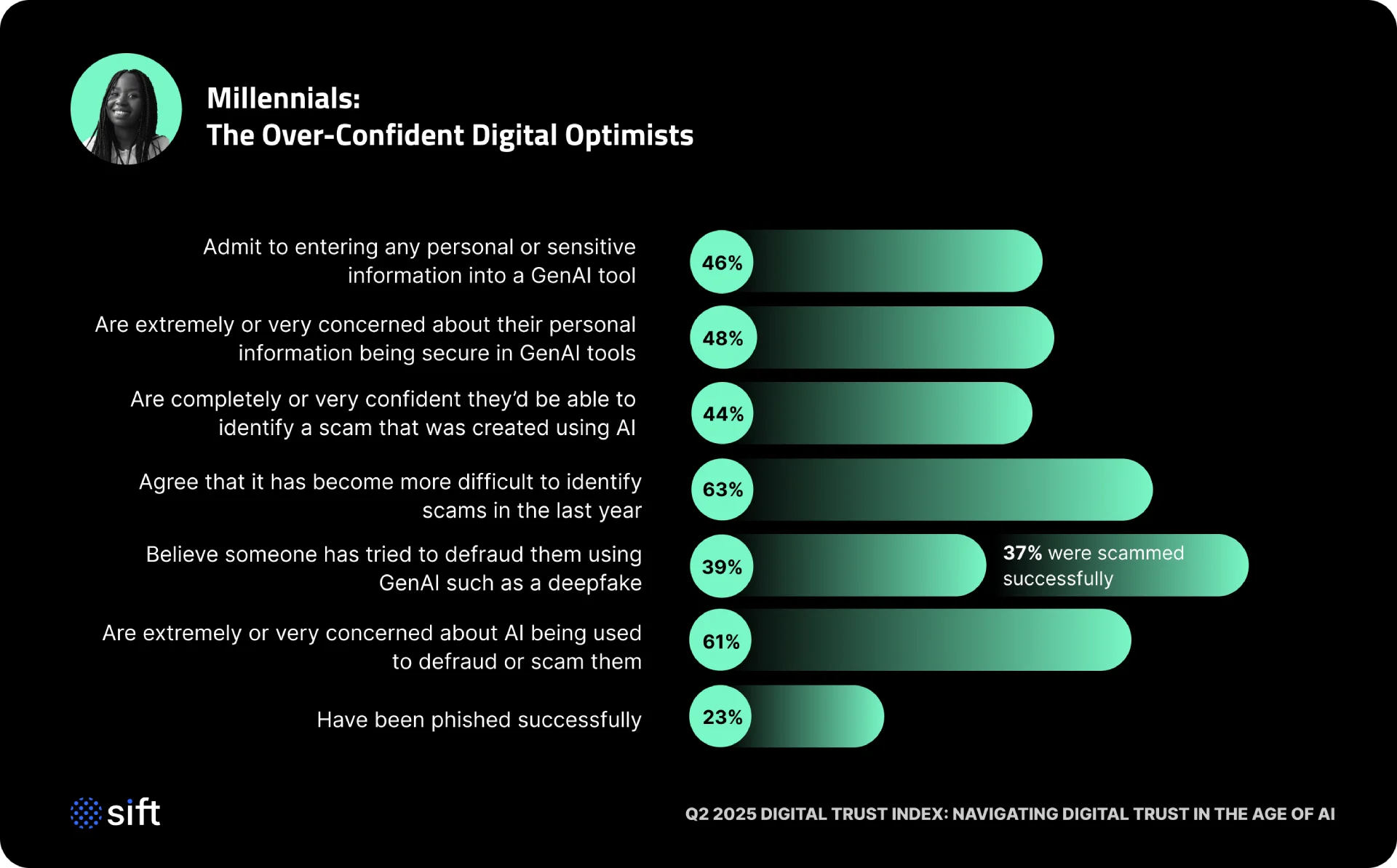

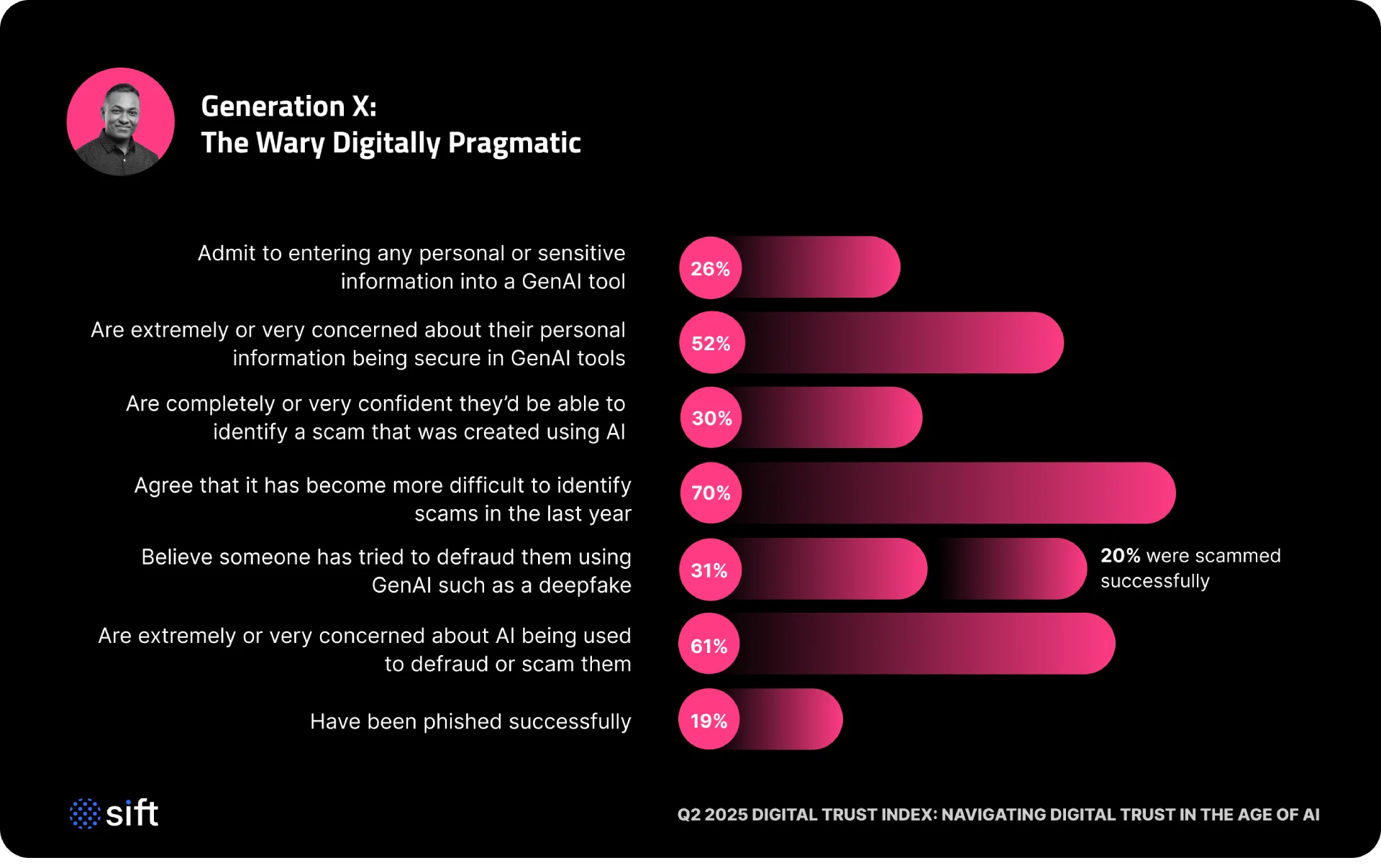

As generative AI rapidly advances, threats are evolving faster than most solutions can adapt.

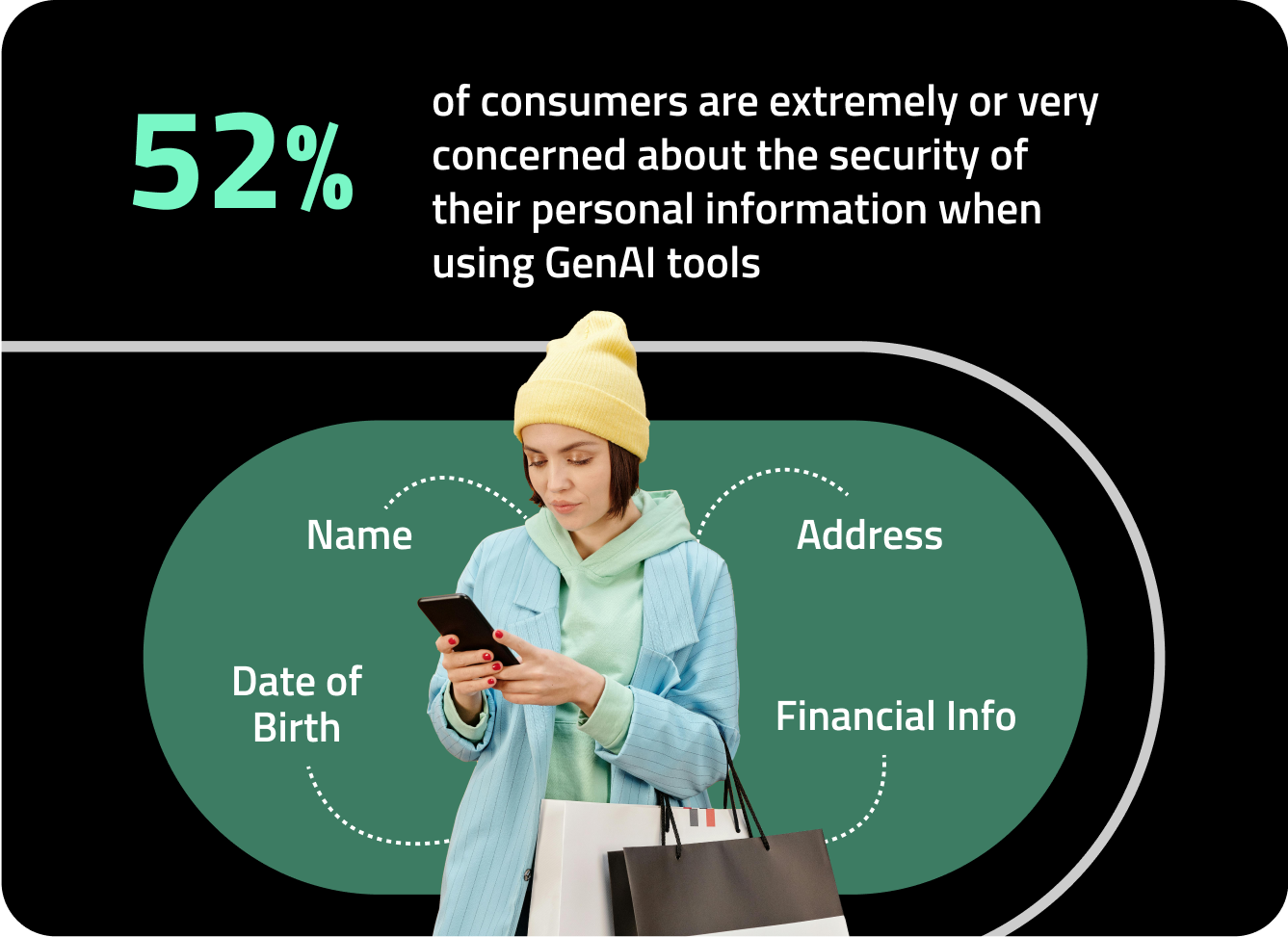

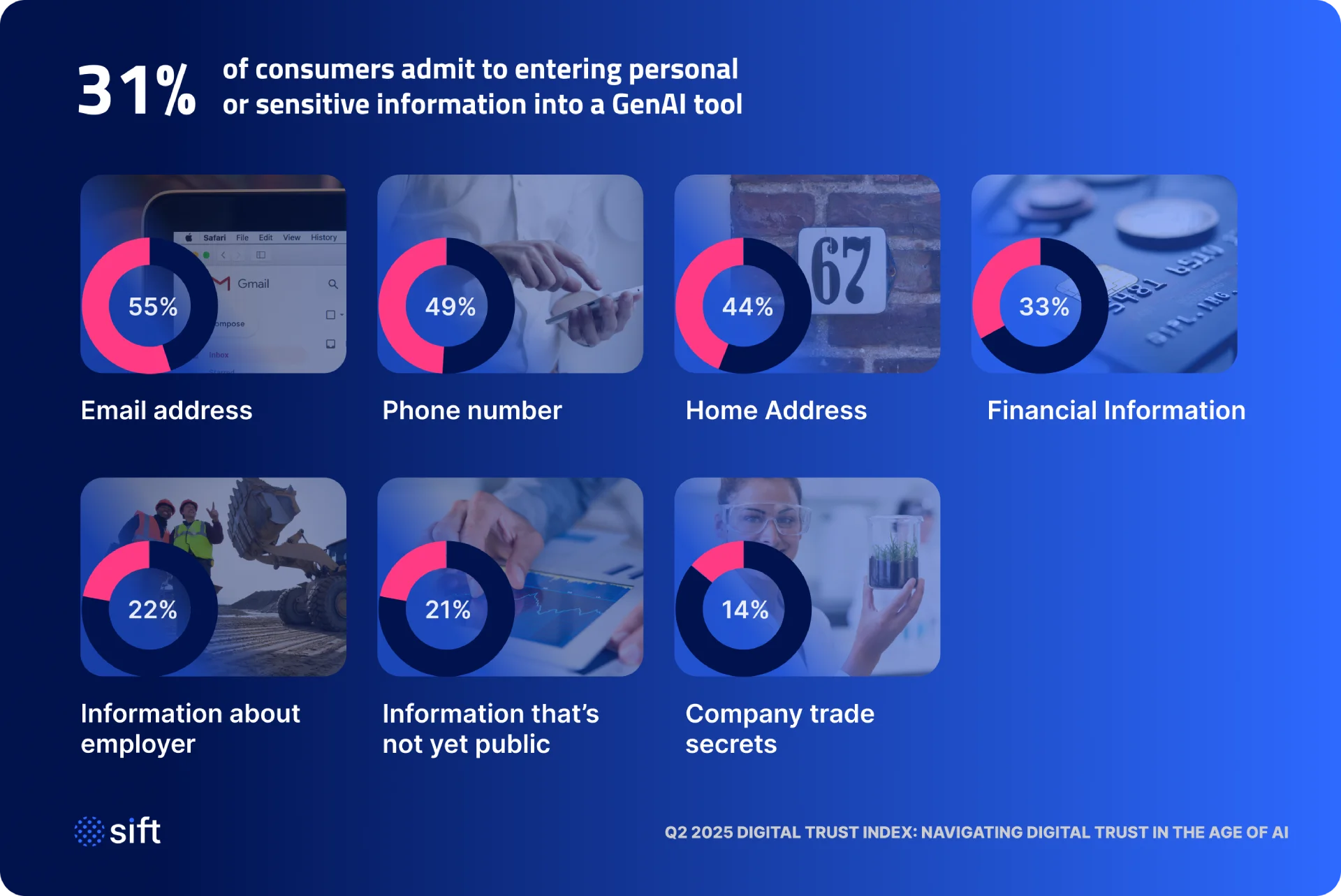

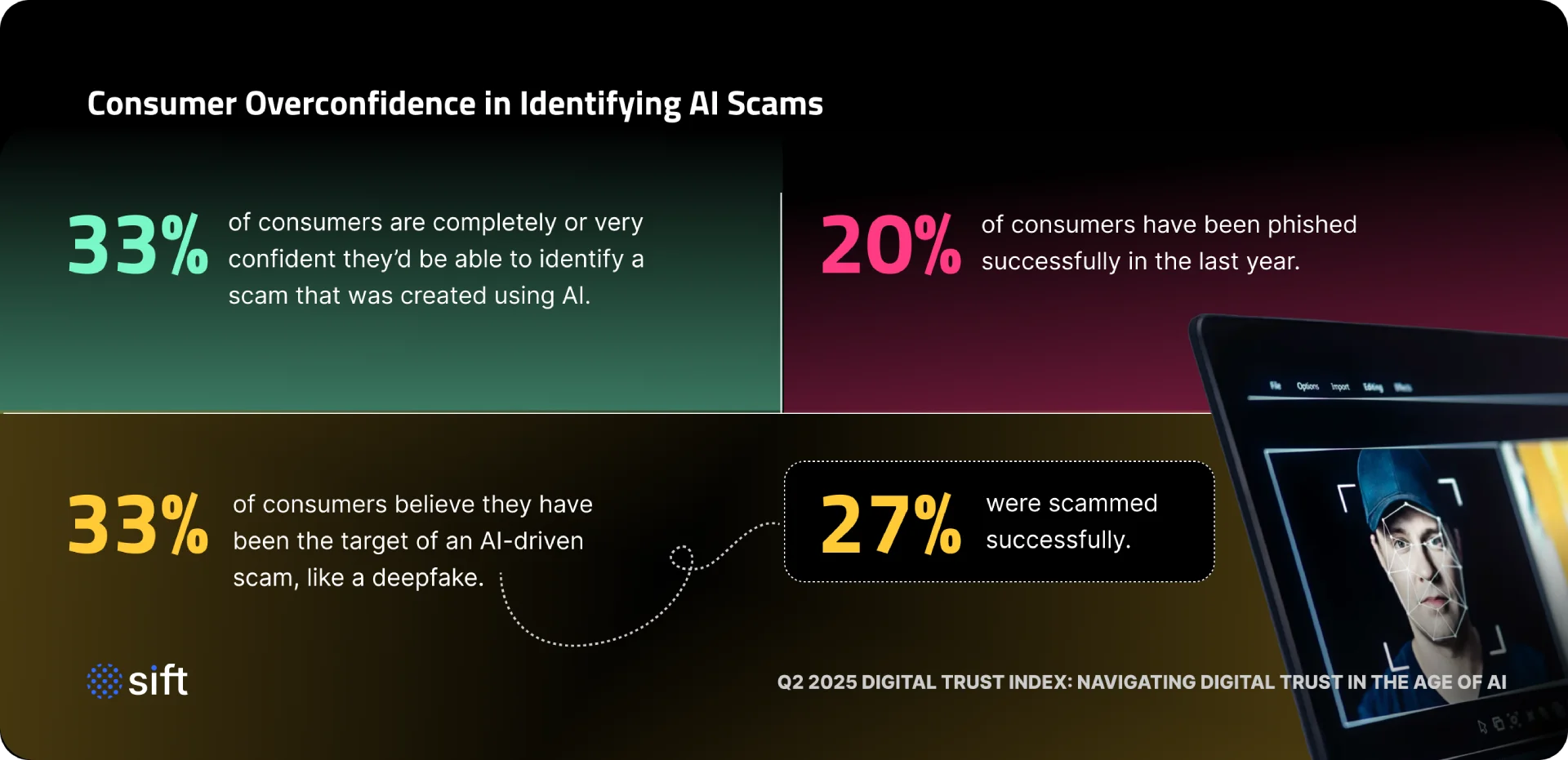

AI-driven fraud is getting sharper, making it harder to distinguish trusted users from malicious ones. Scammers are using GenAI for everything from deepfakes and voice cloning to personalized phishing and fake AI platforms that steal login credentials, all contributing to $1 trillion in global scam losses in 2024.

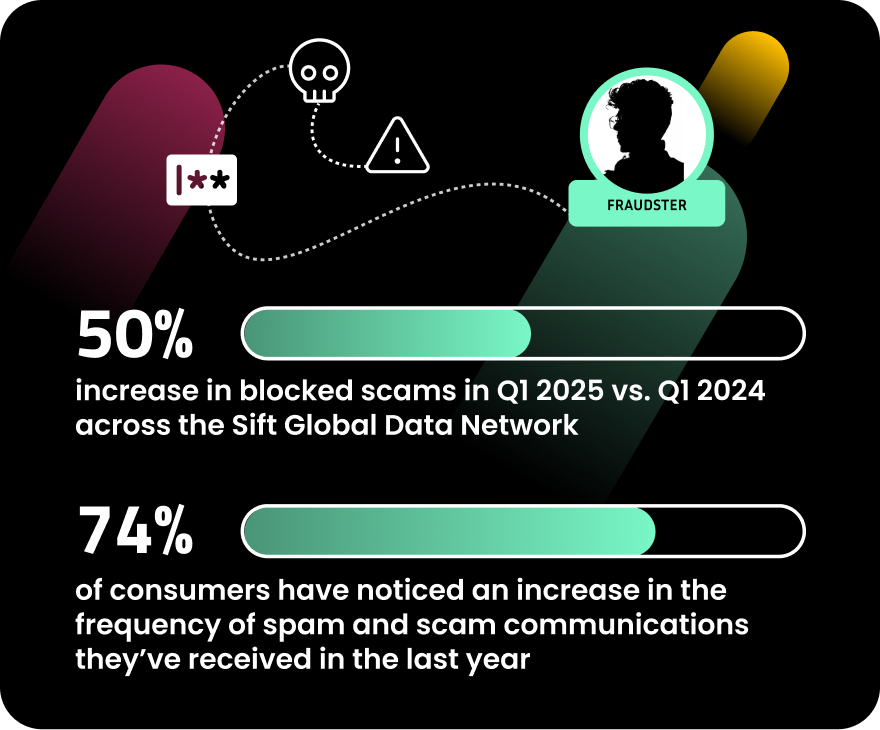

As AI adoption rises, fraud is becoming more scalable, more efficient, and more attractive to those who commit it. Breached personal data surged 186% in Q1 2025 and phishing reports increased 466%, driven by AI-generated phishing kits and automation. Likewise, GenAI-enabled scams rose by 456% between May 2024 and April 2025. More than 82% of phishing emails are now created with the help of AI, allowing fraudsters to craft convincing scams up to 40% faster.